
Operating Systems Notes

Here are some rough notes I put together as part of revision for a uni course. They are heavily based on the course lecture notes by Kevin Elphinstone and Leonid Ryzhyk. All diagrams are sourced from those lecture notes, some of which are in turn from the textbook, A. Tanenbaum, Modern Operating Systems.

OS Overview

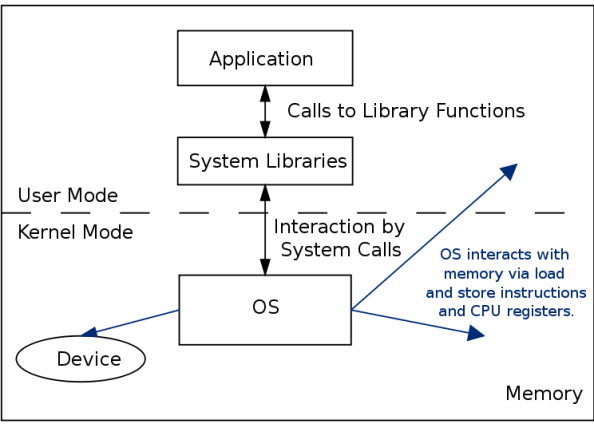

- The OS hides the details of the hardware and provides an abstraction for user code.

- The OS is responsible for allocating resources (cpu, disk, memory…) to users and processes. It must ensure no starvation, progress, and that the allocation is efficient.

- The kernel is the portion of the OS running in privileged mode (hardware must have some support for privileged mode for the OS to be able to enforce the user and kernel mode distinction).

- When the hardware is in privileged mode all instructions and registers are available. When the hardware is in user mode only a limited set of instructions, registers and memory locations is available. For instance, it is not possible to disable interrupts in user mode, because otherwise a user could ensure that the OS never gets control again.

- A process is an instance of a program in execution.

- A process in memory usually has at least three segments: the text segment for the program code (instructions), a data segment for global variables together with the heap for dynamically allocated memory, and a stack segment used for function calls.

- A process also has an execution context which includes registers in use, program counter, stack pointer, etc.

- In a Monolithic OS different parts of the OS like processor allocation, memory management, devices and file systems are not strictly separated into separate layers but rather intertwined, although usually some degree of grouping and separation of components is achieved.

System Calls

- The system call interface represents the abstract machine provided by the operating system.

- man syscalls

- Syscall numbers

- hardware syscall instruction

Processes and Threads

- Processes can contain one or more threads. These threads are units of execution, and always belong to a process.

- A process can terminate by,

- normal exit (voluntary), usually returning EXIT_SUCCESS

- error exit (voluntary), usually returning EXIT_FAILURE

- fatal error (involuntary), eg. segfault, divide by zero error

- killed by another process (involuntary), eg. pkill (sending the SIGTERM signal)

- Thread states

- Running to ready could be from a voluntary yield, or from reaching the end of its allocated CPU timeslice, so the scheduler moves the thread to the ready queue to give another thread a go.

- Running to blocked could be from calling a syscall and waiting for the response, waiting on a device, waiting for a timer, waiting for some resource to become available, etc.

- The scheduler (also called the dispatcher) decides which thread to pick from the ready queue to run.

- The idea is that a process with multiple threads can still make progress when a single thread blocks, as the others can still be working. In more recent times, it also allows a process to have threads running on multiple CPUs on modern multi-core machines.

- The other model is the finite-state machine, where syscalls don’t block but instead allow the program to continue running, and the process is sent an interrupt when the syscall has been dealt with. This is not as easy to program though.

During system initialisation, background processes (called daemons on Linux, services on Windows) and foreground processes can be started.

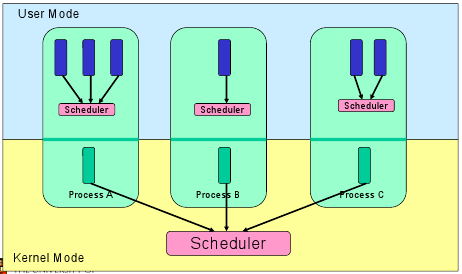

Threads Implementation

- Kernel-threads vs. User-level threads.

- User-level threads

- Thread management is handled by the process (usually in a runtime support library)

- The advantages are,

- switching threads at user-level is much cheaper than the OS doing a context switch

- you can tune your user-level thread scheduler, and not just use the OS provided one

- no OS support for threads needed

- The disadvantages are,

- your threads must yield(), you can’t rely on an interrupt to give your scheduler control again (this is known as co-operative multithreading)

- cannot take advantage of multiple CPUs

- if one of your user-level threads gets blocked by the OS, your whole process gets blocked (because the OS only sees one thread running)

- Kernel-level Threads

- Thread management is done by the kernel through system calls

- The downside is thread management (creation, destruction, blocking and unblocking threads) requires kernel entry and exit, which is expensive

- The upside is we now have preemptive multithreading (the OS just takes control when it wants to schedule another process, so no need to rely on the thread to yield), can take advantage of a multiprocessor, and individual threads can have isolated blocking.

- A thread switch can happen between the execution of any two instructions, yet it must be transparent to the threads involved in the switch. So the OS must save the state of the thread, such as the program counter (instruction pointer), registers, etc., as the thread context. The switch between threads by the OS is called a context switch.
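As a rough illustration of the user-level (co-operative) threads described above, here is a minimal sketch that uses Python generators as threads. The names `worker` and `run_round_robin` are made up for this example; a real runtime library would also save and restore register state, which a generator does implicitly.

```python
from collections import deque

def worker(name, steps, log):
    """A user-level 'thread': does one step of work, then yields voluntarily."""
    for i in range(steps):
        log.append(f"{name}:{i}")
        yield  # co-operative yield() back to the user-level scheduler

def run_round_robin(threads):
    """Tiny user-level scheduler; the kernel would only see a single thread."""
    ready = deque(threads)
    while ready:
        thread = ready.popleft()
        try:
            next(thread)          # resume the thread until its next yield
            ready.append(thread)  # still runnable: back of the ready queue
        except StopIteration:
            pass                  # thread finished, drop it

log = []
run_round_robin([worker("A", 2, log), worker("B", 3, log)])
print(log)
```

If a `worker` never yielded, nothing could take the CPU away from it, which is exactly the weakness of co-operative multithreading noted in the list.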

Concurrency

- A race condition occurs when the computation depends on the relative speed of two or more processes.

- Dealing with race conditions,

- Mutual exclusion

- Identify the shared variables,

- identify the code sections which access these variables (critical region)

- and use some kind of mutual exclusion (such as a lock) to ensure that at most one process can enter a critical region at a time.

- Lock-free data structures

- Allow concurrent access to shared variables, but design the data structures specifically for concurrent access

- Message-based communication

- Eliminate shared variables and instead use communication and synchronisation between processes.

- Mutual exclusion

- Mutual Exclusion – enter_region() and leave_region().

- Hardware usually provides some mutual exclusion support by providing an atomic test and set instruction.

- A uniprocessor system runs one thread at a time, so concurrency arises from preemptive scheduling. But with multiprocessor machines concurrency also arises from running code in parallel using shared memory.

- The problem with the approach to mutual exclusion so far is that when process B is blocked, it just sits in a loop waiting for the critical region to become available. Can fix this by using sleep and wakeup. We use a mutex lock for this.

- Blocking locks can only be implemented in the kernel, but can be accessed by user-level processes by system calls.

- A mutex allows at most one process to use a resource, a semaphore allows at most N processes.

- Producer-consumer problem

- Producer can sleep when buffer is full, and wake up when space available.

- Consumer can sleep when buffer is empty and wake up when some items available.

- Can do using semaphores or condition variables.

- Monitors are set up to only allow one process/thread to operate inside it at once, with extra requests put in a queue. Implemented by the compiler.

- Condition variables. cv_create, cv_destroy, cv_wait, cv_signal, cv_broadcast

- Dining philosophers problem. Need to prevent deadlock.
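The producer-consumer problem above can be sketched with two counting semaphores and a mutex. This is only an illustration using Python's `threading` module; the buffer size and the names `empty`, `full` and `mutex` are assumptions chosen for the example, not taken from the course code.

```python
import threading
from collections import deque

BUFFER_SIZE = 3
buffer = deque()
mutex = threading.Lock()                  # protects the buffer (critical region)
empty = threading.Semaphore(BUFFER_SIZE)  # counts free slots
full = threading.Semaphore(0)             # counts filled slots

def producer(items):
    for item in items:
        empty.acquire()       # sleeps while the buffer is full
        with mutex:
            buffer.append(item)
        full.release()        # wakes a consumer: one more item available

def consumer(count, out):
    for _ in range(count):
        full.acquire()        # sleeps while the buffer is empty
        with mutex:
            item = buffer.popleft()
        empty.release()       # wakes the producer: one more free slot
        out.append(item)

consumed = []
items = list(range(10))
p = threading.Thread(target=producer, args=(items,))
c = threading.Thread(target=consumer, args=(len(items), consumed))
p.start()
c.start()
p.join()
c.join()
print(consumed)
```

The semaphores handle the sleeping and waking, while the mutex only guards the short critical region that touches the shared buffer.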

Deadlock

- A set of processes is deadlocked if each process in the set is waiting for an event that only another process in the set can cause.

- Four conditions for deadlock:

- Mutual exclusion condition (device not shareable amongst threads)

- Hold and wait condition (a process can hold a resource and then block while awaiting more resources)

- Can attack this by requiring all processes to ask for all their resources at the start, and only start if they are granted.

- No preemption condition – previously granted resources cannot be forcibly taken away

- Circular wait condition

- Always request resources in the same order

- Dealing with deadlock:

- ignore the problem

- detect and recover

- dynamic avoidance (careful resource allocation) – we require some information in advance like which resources and how many will be needed before process starts.

- Banker’s algorithm

- prevention (negate one of the four conditions for deadlock)

- Starvation is slightly different to deadlock as the system can be making progress, but there are some processes which never make progress.
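The safety check at the heart of the Banker's algorithm mentioned above can be sketched as follows. This is a simplified illustration: the function name `is_safe` and the example numbers are hypothetical, and `need` stands for the resources each process may still request, which is the advance information that dynamic avoidance requires.

```python
def is_safe(available, allocation, need):
    """Return a safe completion order of process indices, or None if unsafe."""
    work = list(available)
    finished = [False] * len(allocation)
    order = []
    progressed = True
    while progressed:
        progressed = False
        for i, (alloc, nd) in enumerate(zip(allocation, need)):
            if not finished[i] and all(n <= w for n, w in zip(nd, work)):
                # Process i can run to completion and release what it holds.
                work = [w + a for w, a in zip(work, alloc)]
                finished[i] = True
                order.append(i)
                progressed = True
    return order if all(finished) else None

# Illustrative state: 5 processes, 3 resource types.
allocation = [[0, 1, 0], [2, 0, 0], [3, 0, 2], [2, 1, 1], [0, 0, 2]]
need = [[7, 4, 3], [1, 2, 2], [6, 0, 0], [0, 1, 1], [4, 3, 1]]
print(is_safe([3, 3, 2], allocation, need))

# Two processes each hold one unit and each need one more: no safe order.
print(is_safe([0], [[1], [1]], [[1], [1]]))
```

A request is only granted if the state that would result still passes this check.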

File Systems

- File systems provide an abstraction of the physical disk. They allow you to store files in a structured manner on a storage device.

- The file system abstraction,

- Architecture of the OS storage stack,

- The application interacts with the system call interface which on linux provides creat, open, read, write, etc.

- In the case of modern hard drives,

- The disk controller (between device driver and physical disk) hides disk geometry and exposes a linear sequence of blocks.

- The device driver hides the device specific protocol to communicate with the disk.

- The disk scheduler takes in requests coming from different processes and schedules them to send one by one to the device driver.

- The buffer cache keeps blocks in memory to improve read and write times for frequently accessed blocks.

- The file system (FS) hides the block numbers and instead exposes the directory hierarchy, files, file permissions, etc. To do this it must know which blocks relate to which file, and which part of the file. It must also manage how to store this on the blocks of the linear address space of the disk, keeping track of which blocks are in use. See Allocation Strategies

- The virtual file system (VFS) provides an interface so that different file systems which are suitable for VFS (ie. they have the concept of files, directories, etc.) can be accessed uniformly.

- The open file table (OF table) and file descriptor table (FD table) keep track of files opened by user-level processes.

There are many popular file systems in use today. FAT16 and FAT32 are good for embedded devices; Ext2, Ext3 and Ext4 are designed for magnetic disks; ISO9660 is designed for CD-ROMs; JFFS2 is optimised for flash memory, where you don’t want to write to the same location too many times as it wears out. Others include NTFS, ReiserFS, XFS, HFS+, UFS2, ZFS, JFS, OCFS, Btrfs, ExFAT, UBIFS.

Performance issues with standard magnetic disks

- To access a block on a disk the head must move to the required track (seek time), then we have to wait until the required block on the track reaches the head (rotational delay). We also have some mostly fixed overhead for the data to be passed between the device and the driver (transfer time).

- Total average access time, $T_a = T_s + \frac{1}{2r} + \frac{b}{rN}$, where $r$ is the rotational speed, $b$ is the number of bytes to transfer, $N$ is the number of bytes per track and $T_s$ is the seek time.

- Seek time is the most expensive, so we want to ensure that our disk arm scheduler gives good performance.

- First-in, First-out (no starvation)

- Shortest seek time first (good performance, but can lead to starvation)

- Elevator (SCAN) (head scans back and forth over the disk. no great starvation, sequential reads are poor in one of the directions)

- Circular SCAN (head scans over the disk in one direction only)
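To compare the disk arm scheduling policies listed above, the following sketch totals the head movement (in tracks) for each ordering. The request queue and starting track are illustrative, and the `scan` function here reverses at the last pending request rather than at the physical edge of the disk, which is a simplifying assumption.

```python
def fifo(start, requests):
    """First-in, first-out: service requests in arrival order."""
    return list(requests)

def sstf(start, requests):
    """Shortest seek time first: always pick the closest pending track."""
    pending, head, order = list(requests), start, []
    while pending:
        nxt = min(pending, key=lambda t: abs(t - head))
        pending.remove(nxt)
        order.append(nxt)
        head = nxt
    return order

def scan(start, requests):
    """Elevator: sweep upward first, then reverse and sweep downward."""
    up = sorted(t for t in requests if t >= start)
    down = sorted((t for t in requests if t < start), reverse=True)
    return up + down

def total_seek(start, order):
    """Total number of tracks the head crosses when servicing `order`."""
    distance, head = 0, start
    for track in order:
        distance += abs(track - head)
        head = track
    return distance

queue = [98, 183, 37, 122, 14, 124, 65, 67]
for policy in (fifo, sstf, scan):
    print(policy.__name__, total_seek(53, policy(53, queue)))
```

On this queue FIFO moves the head far more than the other two, but SSTF gets its result by repeatedly favouring nearby tracks, which is where the starvation risk comes from.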

Block Allocation Strategies

- Contiguous Allocation

- Gives good performance for sequential operations as all the blocks for a file are together in order

- though you need to know the maximum file size at creation

- as files are deleted, free space is fragmented (external fragmentation)

- Dynamic Allocation

- Blocks allocated as needed, hence the blocks for a file could be all over the place on the disk

- need to keep track of where all the blocks are and the order for each file

External fragmentation – space wasted external to the allocated regions. This space becomes unusable as it’s not contiguous (so you have lots of small spaces but you need a larger space to fit a whole file in)

Internal fragmentation – space wasted internal to the allocated memory regions, eg. you get a 4K block allocated and only use 1K, but the OS can’t give the leftover 3K to someone else.

Keeping track of file blocks

- File allocation table (used for FAT16 and FAT32)

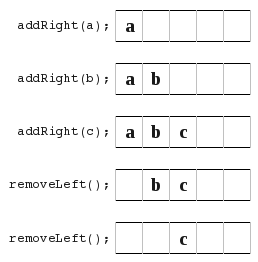

- inode based FS; keep an index node for each file which stores all the blocks of the file.

- Directories are stored as normal files but the FS gives these file special meaning.

- A directory file stores a list of directory entries, where each entry contains the file name (because Ext2/3/4 store these with the directory file, not the inode for the file), attributes and the file’s i-node number.

- Disk blocks (sectors) have a hardware set size, and the file system has a filesystem set block size which is sector size * 2^N, for some N. A larger N means large files need less space for the inode, but smaller blocks waste less space for lots of small files.

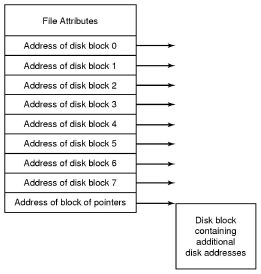

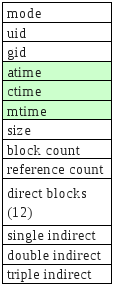

- Ext2 inodes

- The top bunch of data is file attributes. Block count is the number of disk blocks the file uses.

- The direct blocks area stores the block numbers of the first 12 blocks used by the file.

- The single indirect entry is the block number of a block which contains more block numbers.
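To make the direct and single indirect layout concrete, here is a small sketch that maps a byte offset in a file to the slot holding its block number. The 4 KiB block size and 4-byte block numbers are assumptions for the example; only the 12 direct slots come from the notes, and further levels of indirection are deliberately not modelled.

```python
BLOCK_SIZE = 4096      # assumed file system block size in bytes
POINTER_SIZE = 4       # assumed size of one block number in bytes
NUM_DIRECT = 12        # direct block slots in the inode (from the notes)
POINTERS_PER_BLOCK = BLOCK_SIZE // POINTER_SIZE

def locate(offset):
    """Map a byte offset in a file to where its block number is stored."""
    logical_block = offset // BLOCK_SIZE
    if logical_block < NUM_DIRECT:
        return ("direct", logical_block)
    logical_block -= NUM_DIRECT
    if logical_block < POINTERS_PER_BLOCK:
        return ("single indirect", logical_block)
    raise ValueError("offset needs further indirection (not modelled here)")

# Largest file reachable with direct + single indirect blocks only.
max_single = (NUM_DIRECT + POINTERS_PER_BLOCK) * BLOCK_SIZE

print(locate(0), locate(NUM_DIRECT * BLOCK_SIZE), max_single)
```

Small files are served entirely from the direct slots, so they never pay for the extra disk read of the indirect block.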

System call interface

- At the system call level a file descriptor is used to keep track of open files. The file descriptor is associated with the FS inode, a file pointer recording where to read or write next, and the mode the file was opened in (eg. read-only).

- Most Unix OSes maintain a per-process FD table together with a global open file table.
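The split between the per-process FD table and the global open file table can be observed from user space. In this sketch (the scratch file path is hypothetical), a descriptor duplicated with `os.dup` shares one open file table entry, and hence one file pointer, with the original, while a second `os.open` of the same file gets its own entry.

```python
import os
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo.txt")  # hypothetical scratch file

fd1 = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
fd2 = os.dup(fd1)          # second FD table slot, same open file table entry

os.write(fd1, b"hello")    # advances the shared file pointer by 5 bytes
offset_seen_by_fd2 = os.lseek(fd2, 0, os.SEEK_CUR)

fd3 = os.open(path, os.O_RDONLY)   # separate open(): new open file table entry
offset_seen_by_fd3 = os.lseek(fd3, 0, os.SEEK_CUR)

print(offset_seen_by_fd2, offset_seen_by_fd3)

for fd in (fd1, fd2, fd3):
    os.close(fd)
os.remove(path)
```

All three descriptors refer to the same inode; only the file pointer and open mode live in the open file table entry.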

VFS

- Provides a single system call interface for many file systems.

- the application can write file access code that doesn’t depend on the low level file system

- device can be hard disk, cd-rom, network drive, an interface to devices (/dev), an interface to kernel data structures (/proc)

Journaling file system keeps a journal (or log) of FS actions which allows for the FS to recover if it was interrupted when performing an action that is not atomic, but needs to be.

Memory Management and Virtual Memory

- The OS needs to keep track of physical memory: what’s in use and which process it is allocated to. It must also provide applications with a view of virtual memory that they are free to use.

- Swapping (sometimes called paging) is where memory is transferred between RAM and disk to allow for more data in memory than we have space for in physical RAM. On base-limit MMUs, swapping only allows a whole program’s memory allocation to be swapped to disk, rather than just pages at a time as with virtual memory, hence you can’t use swapping here to allow programs larger than memory to run.

- If we are only running one program we can give it all of memory (and run the OS either in part of it, or in ROM). However many systems need more than one process to be running at the same time. Multiprogramming also means that we can be utilising the CPU even when a process is waiting on I/O (ie. give the CPU to the other process). But to support multiprogramming we need to divide memory up.

- We could divide memory up into fixed size partitions and allocate these to processes, but this creates internal fragmentation (wasted space inside the partition allocated).

- Using dynamic partitioning we give each process exactly how much it needs, though this assumes we know how much memory we need before the process is started. This approach also leads to external fragmentation where we have lots of little unallocated gaps, but these are too small to be used individually.

- Approaches to dynamic partitioning include first-fit, next-fit, best-fit and worst-fit.

- Binding addresses in programs

- Compile time

- Load time

- Run time

- Protection – we only want each process to be able to access memory assigned to that process, not memory belonging to other processes

- Base and Limit Registers – set by the kernel on a context switch, the hardware handles the rest

- Virtual Memory – two variants

- Paging

- Partition physical memory into small equal sized chunks called frames.

- Divide each process’s virtual (logical) address space into same size chunks called pages. So a virtual memory address consists of a page number and an offset within that page.

- OS maintains a page table which stores the frame number for each allocated virtual page.

- No external fragmentation, small internal fragmentation.

- Allows sharing of memory

- Provides a nice abstraction for the programmer

- Implemented with the aid of the MMU (memory management unit).

- Segmentation

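The page number and offset split described above can be sketched in a few lines. The page size and the contents of `page_table` are made-up values for illustration; in a real system the lookup is done by the MMU, with the OS maintaining the table.

```python
PAGE_SIZE = 4096  # assumed page/frame size in bytes

# Hypothetical page table for one process: virtual page number -> frame number.
page_table = {0: 5, 1: 2, 3: 7}

class PageFault(Exception):
    """Raised when a virtual page has no mapping in the page table."""

def translate(virtual_address):
    """Split the address into (page, offset) and swap the page for its frame."""
    page, offset = divmod(virtual_address, PAGE_SIZE)
    if page not in page_table:
        raise PageFault(f"page {page} is not mapped")
    return page_table[page] * PAGE_SIZE + offset

print(hex(translate(0x1234)))  # page 1, offset 0x234
```

The offset passes through unchanged, which is why pages and frames must be the same size.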

Virtual Memory

- If a program attempts to access a memory address which is not mapped (ie. is an invalid page) a page fault is triggered by the MMU which the OS handles.

- Two kinds of page faults,

- Illegal Address (protection error) – signal or kill the process

- Page not resident – get an empty frame or load the page from disk, update the page table, and restart the faulting instruction.

- Each entry in the page table not only has the corresponding frame number but also a collection of bits like protection bits, caching disabled bit, modified bit, etc.

- Page tables are implemented as a data structure in main memory. Most processes don’t use the full 4GB address space so we need a data structure that doesn’t waste space.

- The two level page table is commonly used,

- An alternative is the inverted page table,

- The IPT grows with size of RAM, not virtual address space like the two level page table.

- Unlike two level page table which is required for each process, you only need one IPT for the whole machine. The downside is sharing pages is hard.

- Accessing the page table creates an extra overhead to memory access. A cache for page table entries is used called a translation look-aside buffer (TLB) which contains frequently used page table entries.

- TLB can be hardware or software loaded.

- TLB entries are process specific, so we can either flush the TLB on a context switch or give entries an address-space ID so that we can have multiple processes’ entries in the TLB at the same time.

- Principle of Locality (90/10 rule)

- Temporal Locality is the principle that accesses close together in terms of time are likely to be to the same small set of resources (for instance memory locations).

- Spatial locality is the principle that subsequent memory accesses are going to be close together (in terms of their address) rather than random, eg. a loop stepping through an array.

- The set of pages or segments required by an application in a time window (Δ) is called its memory working set.

- Thrashing,

- To recover from thrashing, just suspend some processes until it eases.

- Fetch policy – when should a page be brought into memory? on demand, or pre-fetch?

- Replacement policy – defines which page to evict from physical memory when it’s full and you start swapping to disk.

- Optimal

- FIFO – problem is that age of a page isn’t necessarily related to its usage

- Least Recently Used – works well but need to timestamp each page when referenced.

- Clock (aka. Second Chance) – an approximation of LRU. Uses a usage or reference bit in the frame table

- Resident Set Size – how many frames should each process have?

- Fixed allocation

- Variable allocation – give enough frames to result in an acceptable fault rate
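The replacement policies above can be compared by counting page faults over a reference string. This is a simplified sketch: the reference string is illustrative, LRU is modelled with an ordered dictionary instead of timestamps, and the clock variant sets the reference bit when a page is loaded, which is one of several possible conventions.

```python
from collections import OrderedDict, deque

def fifo_faults(refs, frames):
    resident, queue, faults = set(), deque(), 0
    for page in refs:
        if page in resident:
            continue
        faults += 1
        if len(resident) == frames:
            resident.remove(queue.popleft())  # evict the oldest arrival
        resident.add(page)
        queue.append(page)
    return faults

def lru_faults(refs, frames):
    resident, faults = OrderedDict(), 0       # ordered oldest use -> newest use
    for page in refs:
        if page in resident:
            resident.move_to_end(page)        # record the reference
            continue
        faults += 1
        if len(resident) == frames:
            resident.popitem(last=False)      # evict the least recently used
        resident[page] = True
    return faults

def clock_faults(refs, frames):
    slots = [None] * frames                   # page held by each frame
    referenced = [False] * frames             # reference bit per frame
    hand, faults = 0, 0
    for page in refs:
        if page in slots:
            referenced[slots.index(page)] = True
            continue
        faults += 1
        while referenced[hand]:               # clear the bit: a second chance
            referenced[hand] = False
            hand = (hand + 1) % frames
        slots[hand] = page
        referenced[hand] = True
        hand = (hand + 1) % frames
    return faults

refs = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
for policy in (fifo_faults, lru_faults, clock_faults):
    print(policy.__name__, policy(refs, 3))
```

With 3 frames this string costs FIFO 15 faults and LRU 12; clock needs only one bit per frame rather than a full usage history.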

Input Output

- Programmed I/O (polling or busy waiting)

- Interrupt Driven I/O

- Processor is interrupted when I/O module (controller) is ready to exchange data

- Consumes processor time because every read and write goes through the processor

- Direct Memory Access (DMA)

- The processor is only involved at the start (to set up the transfer) and at the end (when it is interrupted on completion), and is free to work on other things while the device transfers data directly to memory.

Scheduling

- The scheduler decides which task to run next. This is used in multiple contexts, multi-programmed systems (threads or processes on a ready queue), batch system (deciding which job to run next), multi-user system (which user gets privilege?).

- Application behaviour

- CPU bound – completion time largely determined by received CPU time

- I/O bound – completion time largely determined by I/O request time

- Preemptive (requires timer interrupt, ensures rogue processes can’t monopolise the system) v non-preemptive scheduling.

- Scheduling Algorithms can be categorised into three types of systems,

- Batch systems (no users waiting for the mouse to move)

- Interactive systems (users waiting for results)

- Round Robin – each process is run and if it is still running after some timeslice t, the scheduler preempts it, putting it on the end of the ready queue, and scheduling the process on the head of the ready queue.

If the timeslice is too large the system becomes sluggish and unresponsive; if it is too small, too much time is wasted on context switching.

- The traditional UNIX scheduler uses a priority-based round robin scheduler to allow I/O bound jobs to be favoured over long-running CPU-bound jobs.


- Realtime systems (jobs have deadlines that must be met)

- Realtime systems are not necessarily fast, they are predictable.

- To schedule realtime tasks we must know each task’s arrival time, maximum execution time (service time) and deadline.

- tasks could be periodic or sporadic.

- slack time is time when the CPU is not being used, we are waiting for the next task to become available.

- two scheduling algorithms,

- Rate Monotonic – priority driven where priorities are based on the period of each task. ie. the shorter the period the higher the priority

- Earliest Deadline First – guaranteed to work if set of tasks are schedulable. The earlier the deadline the higher the priority.
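The round robin policy described above can be simulated in a few lines. This sketch assumes all jobs arrive at time 0 and ignores the cost of the context switch itself; the job names and burst times are made up.

```python
from collections import deque

def round_robin(burst_times, timeslice):
    """Return (run order, completion times) for jobs that all arrive at time 0."""
    ready = deque(burst_times.items())     # (name, remaining CPU time)
    clock, order, finished = 0, [], {}
    while ready:
        name, remaining = ready.popleft()  # head of the ready queue
        ran = min(timeslice, remaining)
        clock += ran
        order.append(name)
        if remaining > ran:
            ready.append((name, remaining - ran))  # preempted: back of the queue
        else:
            finished[name] = clock
    return order, finished

order, finished = round_robin({"A": 5, "B": 2, "C": 3}, timeslice=2)
print(order, finished)
```

Shrinking the timeslice makes short jobs finish sooner relative to long ones, but in a real system each extra entry in `order` also costs a context switch.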

Multiprocessor Systems

- For this section we look at shared-memory multiprocessors.

- There are uniform memory access (UMA) multiprocessors, and non-uniform memory access (NUMA) multiprocessors which access some parts of memory slower than other parts. We focus on UMA multiprocessors.

- “Spinlocking on a uniprocessor is useless, as another thread on the same processor needs to release it, so blocking asap is desirable. On a multiprocessor, the thread holding the lock may be presently active on another processor, and it could release the lock at any time.

On a multiprocessor, spin-locking can be worthwhile if the average time spent spinning is less than the average overheads of context switch away from, and back to, the lock requester.”

- Thread affinity refers to a thread having some preferred processor to run on in a multiprocessor machine. For instance if thread A started on cpu0 it may want to be scheduled again on cpu0 rather than cpu1 as parts of the cache may still be intact.

- Multiprocessor systems have multiple ready queues, as just one ready queue would be a shared resource which introduces lock contention.

Security

Security Engineering Notes

Here are some rough notes I put together as part of revision for a uni course.

Security Engineering

- CIA

- Confidentiality

- Integrity

- Availability

- Some other attributes include,

- Authenticity

- Privacy

- Accountability

- Non-repudiation

- Receipt-freeness (for voting systems)

- Methods of attack

- Brute Force

- Deception/Social Engineering

- Calculated attack on a vulnerability

- Insider (both intentional and unintentional/accidental)

- Mitigation techniques: keeping logs and conducting audits could help detect and deter insider attacks. Also apply least privilege to limit accidental insider damage.

- Chance (right place at the right time)

- Accidental

- Reliable systems (protect against random failures)

- Secure systems (protect against targeted deliberate attacks)

- Assets which need to be protected

- Tangible (eg. documents)

- Non-tangible (eg. reputation)

- Security Engineering: Protecting assets against threats.

- Kerckhoffs’ Principle – “the security of the cryptosystem must not depend on keeping secret the crypto-algorithm. It must depend only on keeping secret the key.”

- Because it’s often easier to change the key after a compromise or possible compromise than to change the algorithm,

- and it’s usually also harder to keep the algorithm secret (can be reverse-engineered or bought) compared to the key

Crypto

- Code (hidden meaning, ie. a spy using a phrase that seems normal, but actually has some other hidden meaning)

- Plaintext → E(encryption key) → Ciphertext → D(decryption key) → Plaintext

- Symmetric-key cryptography – Encryption key can be calculated from the decryption key and vice versa (usually they are the same though). The sender and receiver must agree upon the keys first. Two types,

- Stream Ciphers – operate one bit at a time (eg. RC4)

- Block Ciphers – operate on a group of bits at a time (eg. DES and AES/Rijndael)

- Block chaining is used to make each block of cipher text depend on all the previous blocks of plaintext.

- Public-key Cryptography (asymmetric-key) – public and private keys where you cannot (or it is really hard to) calculate one from the other. (eg. RSA)

- One problem is that an attacker can use an adaptive chosen plaintext attack, where they encrypt a large number of messages using the target’s public key. A fix is to pad messages with a long random string.

- Can be used for digital signatures: encrypt your message M with your private key (though in practice a one-way hash of M is signed instead). Verifiers just decrypt using your public key, as only someone with the private key (ie. you) could have successfully encrypted M.

- Birthday attack – find two messages that have the same hash but only differ by say spaces at the end of the line, and some other key part like dollar amount. Then when you get Alice to sign document A, the signature will be the same for document B (as Alice is signing the hashes and the hashes are the same)

- Public Key Infrastructure – how to ensure that Bob’s public key really belongs to the Bob you want to communicate with (tries to solve the key distribution problem).

- Public Key Certificates

- Certificate distribution (X.509, GPG)

- Most cryptosystems use public-key crypto just to exchange a shared symmetric key, and then both parties use this shared key to communicate with symmetric-key crypto. These systems are known as hybrid cryptosystems. This is done for mainly two reasons,

- public-key algorithms are usually around 1000 times slower than symmetric algorithms.

- public-key algorithms are vulnerable to chosen-plain text attacks.

- Cryptanalysis is about determining the plaintext (or the key) from the ciphertext, without being given the key.

- Authentication
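The birthday attack mentioned above can be demonstrated against a deliberately weakened hash. In this sketch SHA-256 is truncated to 20 bits so that a collision turns up quickly; the two document texts and the numbered suffix (standing in for invisible whitespace changes) are hypothetical.

```python
import hashlib
from itertools import count

def short_hash(message, bits=20):
    """Truncated SHA-256, weak on purpose so collisions are cheap to find."""
    digest = int.from_bytes(hashlib.sha256(message).digest(), "big")
    return digest >> (256 - bits)

def find_collision(doc_a, doc_b, bits=20):
    """Generate harmless variants of both documents until two hashes match."""
    seen = {}  # hash value -> variant of document A that produced it
    for i in count():
        suffix = f" #{i}".encode()   # stand-in for trailing whitespace tweaks
        variant_a, variant_b = doc_a + suffix, doc_b + suffix
        seen[short_hash(variant_a, bits)] = variant_a
        h = short_hash(variant_b, bits)
        if h in seen:
            return seen[h], variant_b

a, b = find_collision(b"Pay Bob $10", b"Pay Bob $1000")
print(a, b, short_hash(a) == short_hash(b))
```

Because any variant of A may match any variant of B, the work grows with roughly the square root of the number of possible hash values, which is why real hash outputs need to be long.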

Extra notes from Schneier 2nd Ed.

- Types of attacks,

- cipher-text only

- known plain text

- chosen plain text

- adaptive chosen plain text (you can modify your next choice based on the results of the previous choice)

- chosen cipher text

- known key relationship

- rubberhose (get the person who knows the key to reveal it)

- Substitution ciphers

- Simple (monoalphabetic cipher)

- A → 2, B → 3, C → 1, …

- Homophonic (where multiple options available such as 5,2 one is chosen at random)

- A → 5,2; B → 1,3; C → 4; …

- Polygram

- ABC → 819, ABB → 234, ABA → 567, ACA → 587, …

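- Polyalphabetic (simple substitution cipher, but the mapping also depends on the position of the input character in the plaintext). Of the classic examples below, only the Vigenere cipher is polyalphabetic; the Caesar cipher and rot13 are simple substitution ciphers with a fixed shift,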

- Caesar cipher – shift each letter forward or back by K. eg. if K = 1, A → B, B → C …

- rot13

- Vigenere cipher – align the repeated key K over the plaintext

- These can be attacked by statistical cryptanalysis of the letter frequency


- Transposition Ciphers – the order of the characters is changed, but the characters themselves are not (note that rotor machines such as the Enigma are substitution ciphers rather than transposition ciphers)

- eg. write across, but read down

HELLO WORLD THISI SSOME

encrypts to HWTSEOHSLRIOLLSMODIE


- One time pads

- One-way hash function (also known as message digest, fingerprint, cryptographic checksum)

- pre-image → f(x) → hash value

- A hash function is collision free if it is hard (ie. at best becomes brute force) to generate two pre-images with the same hash value.

- Also a single bit change in the pre-image should on average change half of the bits in the hash value.

- Types of random sequences,

- Pseudo-random (looks random, good for applications like writing a random AI game agent)

- Cryptographically secure pseudo-random (unpredictable)

- True randomness (cannot be reproduced with the same inputs)

- Zero-knowledge proofs – proving to someone that you know something without revealing to them what that something is. Two types,

- Interactive

- Non-interactive
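The classical ciphers listed above are small enough to sketch directly. The function names are invented for this example, and the code assumes uppercase A to Z input (the Vigenere function does not handle spaces or punctuation).

```python
import string

ALPHABET = string.ascii_uppercase

def caesar(text, k):
    """Shift each letter forward by k (rot13 is the special case k = 13)."""
    return "".join(
        ALPHABET[(ALPHABET.index(c) + k) % 26] if c in ALPHABET else c
        for c in text
    )

def vigenere(text, key):
    """Align the repeated key over the plaintext; each key letter picks a shift."""
    shifts = [ALPHABET.index(k) for k in key]
    return "".join(
        ALPHABET[(ALPHABET.index(c) + shifts[i % len(shifts)]) % 26]
        for i, c in enumerate(text)
    )

def transpose(text, width):
    """Write the text across rows of `width` characters, then read down columns."""
    rows = [text[i:i + width] for i in range(0, len(text), width)]
    return "".join(row[col] for col in range(width) for row in rows if col < len(row))

print(caesar("ABC", 1))
print(vigenere("ATTACKATDAWN", "LEMON"))
print(transpose("HELLOWORLDTHISISSOME", 5))
```

The last call reproduces the write-across, read-down example from the notes. Transposition leaves letter frequencies untouched, whereas the substitution ciphers change the letters but, for a fixed shift, keep the frequency pattern.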

RSA

Choose two primes p and q and let n = pq. Choose e such that e and (p – 1)(q – 1) are relatively prime (ie. they share no common factor other than 1; neither needs to be prime itself). Let d be a solution of ed = 1 mod (p – 1)(q – 1). The public key is (n, e) and the private key is d.
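The key generation steps above can be followed with toy numbers. The primes, exponent and message below are arbitrary small values chosen for illustration and are far too small to be secure; the modular inverse via `pow(e, -1, phi)` needs Python 3.8 or later.

```python
from math import gcd

p, q = 61, 53                 # toy primes, far too small for real use
n = p * q                     # 3233
phi = (p - 1) * (q - 1)       # 3120

e = 17
assert gcd(e, phi) == 1       # e must be relatively prime to (p-1)(q-1)
d = pow(e, -1, phi)           # modular inverse, so that e*d = 1 mod phi

message = 65
ciphertext = pow(message, e, n)    # encrypt with the public key (n, e)
recovered = pow(ciphertext, d, n)  # decrypt with the private key d
print(d, ciphertext, recovered)
```

Swapping the roles of e and d (raising to d first, then to e) gives the signing and verification operations described in the Crypto section.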

Access Control

- “An access control policy is a description of who may access what (and how).”

- Principle of least privilege – “The powers that an agent in the system is given should be the minimum that they need to have to play their assigned role in the system.”

- AC can be done at many levels (eg. hardware, os, database, network, application…)

- Managing Subjects, Objects and Operations. (can use Groups or Roles to classify subjects)

- Access Control Matrix (central database of all objects, subjects and operations permitted)

- Access Control List (store permissions with object)

- Capabilities (store permissions with subject)

- Mandatory AC – central entity sets the AC.

- Multi-level (eg. top secret, secret, unclassified labels…)

- Bell-LaPadula – no read up, no write down.


- Discretionary – each object has an owner, and the owner sets the AC.

- Commercial Policies

- Chinese Wall – eg set up rules like if a subject can access X they cannot access Y.

- Dual Control – need two people together to modify X, they cannot do it on their own.

- Reference Monitor – some entity that sits between the subjects and objects to implement some policy (relies on trusted entity)
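The relationship between the access control matrix, ACLs and capabilities, along with the Bell-LaPadula rule, can be sketched as below. The subjects, objects and level names are hypothetical example data.

```python
# Access control matrix: subject -> object -> permitted operations.
matrix = {
    "alice": {"report.txt": {"read", "write"}, "payroll.db": {"read"}},
    "bob": {"report.txt": {"read"}},
}

def acl(obj):
    """ACL view: the matrix column for one object, stored with the object."""
    return {s: ops[obj] for s, ops in matrix.items() if obj in ops}

def capabilities(subject):
    """Capability view: the matrix row for one subject, stored with the subject."""
    return matrix.get(subject, {})

LEVELS = {"unclassified": 0, "secret": 1, "top secret": 2}

def blp_allows(subject_level, object_level, operation):
    """Bell-LaPadula: no read up, no write down."""
    s, o = LEVELS[subject_level], LEVELS[object_level]
    if operation == "read":
        return s >= o
    if operation == "write":
        return s <= o
    return False

print(acl("report.txt"))
print(capabilities("bob"))
print(blp_allows("secret", "top secret", "read"))
```

ACLs make it easy to answer "who can access this object?", while capabilities make it easy to answer "what can this subject access?".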

Elections

You want your elections to be,

- verifiable – so the voter can check that their vote was indeed counted (and if possible check that everyone else’s vote was counted correctly)

- correct – votes are counted correctly and the results are calculated correctly

- secret (ie. no one knows how you vote, so that you cannot be coerced)

- coercion free (so you want it to be receipt-free)

(additional notes, but don’t really need to know for exam)

- True Democracy (like the Greek’s assembly)

- Representative Democracy (where we vote in someone to represent us)

- Participatory Democracy (where we represent ourselves and all have equal say)

- Australian Federal Elections are subject to the Mafia problem. Because we have preferential voting (you rank the candidates in order of preference), there are n! ways of voting for n candidates. So if there are enough candidates and few enough voters, you can coerce a person into using a specific voting pattern, and then verify that they did indeed vote that way.

- An alternative attack would be to obtain a blank voting paper, fill it in the way you want, give it to someone, and tell them to submit it and return a blank one to you. The attacker won’t know if the victim has scribbled on it to make it invalid, but at least the attacker has prevented someone from voting.
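The n! argument behind the Mafia problem is easy to check numerically. This sketch assumes every voter must rank all n candidates; the function name and the voter count are illustrative.

```python
from math import factorial

def min_candidates_for_unique_patterns(voters):
    """Smallest n with n! >= voters, so each voter could get a distinct ordering."""
    n = 1
    while factorial(n) < voters:
        n += 1
    return n

print(min_candidates_for_unique_patterns(100_000))
print(factorial(10))
```

Once the number of possible orderings exceeds the number of voters at a polling place, an assigned ordering showing up in the count acts as a receipt for the coerced vote.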

Security Architecture

Security Design Principles:

- Economy of Mechanism – keep things simple

- Fail-safe defaults

- Complete Mediation – every access request checked

- Open design, ie. Kerckhoffs’ Principle: Keep the key secret, don’t rely on keeping the algorithm secret.

- Separation of Privilege

- Least Privilege

- Least common mechanism

- Psychological acceptability

Defence in depth – use many layers of security (or many security perimeters in layers)

Human Factors

- People are trusting

- People are lazy (to read the manual or change factory defaults)

- People are greedy (will exploit the system if given the chance)

- People are forgetful (forget to revoke access when terminating employees)

- People are selfish

- People are stickybeaks (eg. Medicare employees accessing client data for personal interest)

Some strategies for reducing the risk,

- logging of insider’s actions

- honeypots for insiders (eg. fake web server running on the network, or fake data in the database)

- security policy for staff to follow

Risk

Risk is not something I would have thought to be in a security course, but now that I think about it there are few if any bulletproof systems, so there is always some risk.

Take secure communication: there is always some risk that an eavesdropper cracks your crypto and reads the message, so it’s important to weigh up those risks to decide if it’s worth sending the message in the first place. Or take running a web server: you cannot be sure that your web server doesn’t have bugs, and even if you have verified that the code matches a vulnerability-free specification, other things can go wrong. Has your CPU been verified to be bug free? Are you sure that a cosmic ray won’t flip a bit and subsequently create a vulnerability? So weighing up the risks involved is important when deciding how much time and effort you devote to securing a system, or how the system is designed to work.

Business Risk Concepts

- Exposure – what is the worst case scenario (or loss)

- Volatility – how predictable is the loss

- Probability – how likely is that loss

Degree of Risk

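| | Low Probability | High Probability |
| --- | --- | --- |
| High Exposure | High Exposure / Low Probability | High Exposure / High Probability |
| Low Exposure | Low Exposure / Low Probability | Low Exposure / High Probability |

(Probability increases from left to right, exposure increases from bottom to top.)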

Exposure – how widespread is the system?

Probability – how easy is it to exploit the system, and how great is the incentive to do so (which relates to how valuable the assets you are protecting are)?

Risk management

- Example. The risk of viruses; the mitigation strategy may be to use anti-virus detection, but the residual risk is zero-day attacks.

- Carry the risk – just accept it.

- Balancing the risk – eg. data backups at multiple locations, or distributed servers at multiple locations (perhaps even running different systems. For instance, if you want to keep your web site up and running you may load balance between a Linux box running Apache and a Windows box running IIS, so that a vulnerability found in one is not likely to be present in the other and the attacker can’t take both offline with the one attack).

- Mitigate it – reduce the risk

- Transfer the risk – eg. in finance, get insurance

Computer Graphics Notes

Not really complete…

Colour notes here, transformations notes here.

Parametric Curves and Surfaces

Parametric Representation

eg.

Continuity

Parametric Continuity

- If the first derivative of a curve is continuous, we say it has C1 continuity.

Geometric Continuity

- If the magnitude of the first derivative of a curve changes but the direction doesn’t, then we say it has G1 continuity.

- Curves need G2 continuity in order for a car to drive along them. (ie. not instantly change steering wheel angle at any points).

Control Points

Control points allow us to shape/define curves visually. A curve will either interpolate or approximate control points.

Natural Cubic Splines

- Interpolate control points.

- A cubic curve between each pair of control points

- Four unknowns for each cubic (its four coefficients),

- interpolating the two control points gives two,

- requiring that derivatives match at the end points of these curves gives the other two.

- Moving one control point changes the whole curve (ie. no local control over the shape of the curve)

Bezier Curve

The Bezier curve shown has two segments, where each segment is defined by 4 control points. Each segment interpolates two of its points and approximates the other two. The curve is defined by a Bernstein polynomial. In the diagram, changing points 1 and 2 only affects that segment. Changing a corner point (0 or 3) only affects the two segments that it borders.

Some properties of Bezier Curves:

- Tangent Property. Tangent at point 0 is line 0 to 1, similarly for point 3.

- Convex Hull Property. The curve lies inside the convex hull of the control points. (The corollary of this is if the control points are colinear, the curve is a line.)

- They have affine invariance.

- Can’t fluctuate more than their control polygon does.

- Bezier’s are a special case of B-spline curves.

We can join two Bezier curves B1(P0, P1, P2, P3) and B2(P3, P4, P5, P6) with C1 continuity if P3 – P2 = P4 – P3. That is, P2, P3, and P4 are colinear and P3 is the midpoint of P2 and P4. To get G1 continuity we just need P2, P3, and P4 to be colinear (with P3 between P2 and P4). If we have G1 continuity but not C1 continuity the curve still won’t have any corners, but you will notice a “corner” if you’re using the curve for something else, such as some cases in animation. [Also, if the curve defined a road without G2 continuity there would be points where you must change the steering wheel from one rotation to another instantly in order to stay on the path.]

De Casteljau Algorithm

De Casteljau Algorithm is a recursive method to evaluate points on a Bezier curve.

To calculate the point halfway on the curve, that is t = 0.5 using De Casteljau’s algorithm we (as shown above) find the midpoints on each of the lines shown in green, then join the midpoints of the lines shown in red, then the midpoint of the resulting line is a point on the curve. To find the points for different values of t, just use that ratio to split the lines instead of using the midpoints. Also note that we have actually split the Bezier curve into two. The first defined by P0, P01, P012, P0123 and the second by P0123, P123, P23, P3.
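The repeated splitting described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `lerp` and `de_casteljau` and the sample control points are made up for this example, and the loop is written iteratively rather than recursively.

```python
def lerp(p, q, t):
    """Return the point a fraction t of the way from p to q."""
    return tuple((1 - t) * a + t * b for a, b in zip(p, q))


def de_casteljau(points, t):
    """Evaluate a Bezier curve at t.

    Returns (point_on_curve, left_control_points, right_control_points),
    where left/right are the control points of the two halves of the split.
    """
    left, right = [points[0]], [points[-1]]
    level = list(points)
    while len(level) > 1:
        # Split every line of the current control polygon at ratio t.
        level = [lerp(level[i], level[i + 1], t) for i in range(len(level) - 1)]
        left.append(level[0])
        right.append(level[-1])
    return level[0], left, right[::-1]


# Hypothetical cubic: P0, P1, P2, P3.
control = [(0.0, 0.0), (0.0, 2.0), (4.0, 2.0), (4.0, 0.0)]
point, left, right = de_casteljau(control, 0.5)
print(point)   # the point on the curve at t = 0.5
print(left)    # P0, P01, P012, P0123
print(right)   # P0123, P123, P23, P3
```

The `left` and `right` lists are the control points of the two smaller Bezier curves, in the same order as listed in the paragraph above.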

Curvature

The curvature of a circle of radius $r$ is $1/r$.

The curvature of a curve at any point is the curvature of the osculating circle at that point. The osculating circle for a point on a curve is the circle that “just touches” the curve at that point. The curvature of a curve corresponds to the position of the steering wheel of a car going around that curve.

Uniform B Splines

Join with C2 continuity.

None of the B splines interpolate any points; they just approximate the control points.

Non-Uniform B Splines

Only invariant under affine transformations, not projective transformations.

Rational B Splines

Rational means that they are invariant under projective and affine transformations.

NURBS

Non-Uniform Rational B Splines

Can be used to model any of the conic sections (circle, ellipse, hyperbola)

=====================

3D

When rotating about an axis in OpenGL you can use the right hand rule to determine the + direction (thumb points along the axis, fingers indicate the + rotation direction).

We can think of transformations as changing the coordinate system, where (u, v, n) is the new basis and O is the origin.

This kind of transformation is known as a local to world transform. This is useful for defining objects which are made up of many smaller objects. It also means that to transform the object we just have to change the local to world transform instead of changing the coordinates of each individual vertex. A series of local to world transformations on objects builds up a scene graph, useful for drawing a scene with many distinct models.

Matrix Stacks

OpenGL has MODELVIEW, PROJECTION, and TEXTURE matrix modes, each with its own matrix stack (the viewport is not a matrix mode; it is set with glViewport).

- glLoadIdentity() – replaces the matrix on the top of the stack with the identity matrix

- glPushMatrix() – copies the top of the matrix stack and puts the copy on top

- glPopMatrix() – removes the top matrix from the stack

For MODELVIEW, operations include glTranslate, glScaled, glRotated… These are post-multiplied onto the top of the stack, so the last call is applied to a vertex first (ie. a glTranslate followed by a glScaled will scale then translate).

Any vertex passed to glVertex() is transformed by the matrix on the top of the MODELVIEW stack.
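To see why post-multiplication means "last call applied first", here is a small sketch that mimics a matrix stack in plain Python. It uses 2D homogeneous 3x3 matrices for brevity (OpenGL itself uses 4x4), and every name here (`matmul`, `translate`, `scale`, `transform`, `stack`) is a stand-in invented for the example, not an OpenGL call.

```python
def matmul(a, b):
    """Multiply two 3x3 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def translate(tx, ty):
    return [[1, 0, tx], [0, 1, ty], [0, 0, 1]]


def scale(sx, sy):
    return [[sx, 0, 0], [0, sy, 0], [0, 0, 1]]


def transform(m, x, y):
    """Apply matrix m to the point (x, y)."""
    v = (x, y, 1)
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(2))


IDENTITY = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

stack = [IDENTITY]                                  # like glLoadIdentity()
stack.append([row[:] for row in stack[-1]])         # like glPushMatrix()
stack[-1] = matmul(stack[-1], translate(10, 0))     # like glTranslate: top = top * T
stack[-1] = matmul(stack[-1], scale(2, 2))          # like glScale:     top = top * S
vertex = transform(stack[-1], 1, 1)                 # like glVertex: (T * S) * v
stack.pop()                                         # like glPopMatrix()

print(vertex)  # (12, 2): the point was scaled to (2, 2) first, then translated
```

Had the two calls been made in the opposite order, the point would have been translated to (11, 1) and then scaled to (22, 2).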

Usually the hardware only supports projection and texture stacks of size 2, whereas the modelview stack should have at least a size of 32.

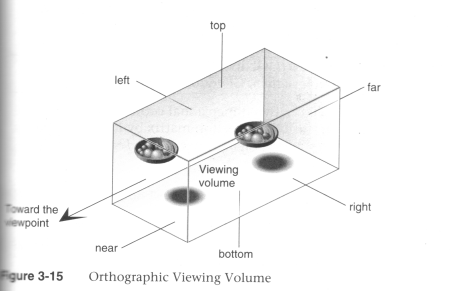

The View Volume

Can set the view volume (after setting the current matrix stack to the PROJECTION stack) using,

- glOrtho(left, right, bottom, top, near, far)

- glFrustum(left, right, bottom, top, near, far)

- gluPerspective(fovy, aspect, zNear, zFar)

In OpenGL the projection method just determines how to squish the 3D space into the canonical view volume.

Then you can set the view direction using gluLookAt (after calling one of the above), where you set the eye location, a point to look at, and an up vector.

When using perspective the view volume will be a frustum, but this is more complicated to clip against than a cube. So we convert the view volume into the canonical view volume which is just a transformation to make the view volume a cube at 0,0,0 of width 2. Yes this introduces distortion but this will be compensated by the final window to viewport transformation.

Remember we can set the viewport with glViewport(left, bottom, width, height), where left and bottom are a location in the screen (I think this means window, but this stuff is probably older than modern window management so I’m not worrying about the details here).

Visible Surface Determination (Hidden Surface Removal)

First clip to the view volume then do back face culling.

Could just sort the polygons and draw the ones further away first (painter’s algorithm/depth sorting). But this fails for cases like three triangles that each overlap the next in a cycle.

Can fix by splitting the polygons.

BSP (Binary Space Partitioning)

For each polygon there is a region in front and a region behind the polygon. Keep subdividing the space for all the polygons.

Can then use this BSP tree to draw.

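    void drawBSP(BSPTree m, Point myPos) {
        if (m == null) return;  // empty subtree: nothing to draw
        if (m.poly.inFront(myPos)) {
            // Viewer is in front of the polygon: draw what is behind it first.
            drawBSP(m.behind, myPos);
            draw(m.poly);
            drawBSP(m.front, myPos);
        } else {
            // Viewer is behind the polygon: draw what is in front of it first.
            drawBSP(m.front, myPos);
            draw(m.poly);
            drawBSP(m.behind, myPos);
        }
    }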

If one polygon’s plane cuts another polygon, need to split the polygon.

You get different tree structures depending on the order you select the polygons. This does not matter, but some orders will give a more efficient result.

Building the BSP tree is slow, but it does not need to be recalculated when the viewer moves around. We would need to recalculate the tree if the polygons move or new ones are added.

BSP trees are not so common anymore, instead the Z buffer is used.

Z Buffer

Before we write a pixel into the framebuffer, we check the z buffer and only fill that pixel if its z value (which can be a pseudo-depth) is less than the one stored in the z buffer (larger values are further away). If we do fill it, we must also update the z buffer value for that pixel.
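A minimal sketch of that depth test, assuming smaller z means closer. The tiny buffer size, the string "colours" and the `plot` helper are all made up for illustration; a real renderer would do this per fragment in hardware.

```python
WIDTH, HEIGHT = 4, 3
FAR = float("inf")  # initial depth: further away than anything we draw

framebuffer = [["background"] * WIDTH for _ in range(HEIGHT)]
zbuffer = [[FAR] * WIDTH for _ in range(HEIGHT)]


def plot(x, y, z, colour):
    """Write the fragment only if it is nearer than what is already stored."""
    if z < zbuffer[y][x]:
        zbuffer[y][x] = z            # remember the new nearest depth
        framebuffer[y][x] = colour
        return True
    return False                     # hidden behind an earlier fragment


# Three fragments land on the same pixel, in arbitrary drawing order.
results = [
    plot(1, 1, 0.8, "red"),
    plot(1, 1, 0.3, "green"),   # nearer than red, so it replaces it
    plot(1, 1, 0.5, "blue"),    # further than green, so it is discarded
]
print(framebuffer[1][1], zbuffer[1][1])
```

Note that the result does not depend on the order the polygons are drawn in, which is what makes the z buffer simpler to use than depth sorting.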

Try to use the full range of values for each pixel element in the z buffer.

To use in OpenGL just do gl.glEnable(GL.GL_DEPTH_TEST) and to clear the z-buffer use gl.glClear(GL.GL_DEPTH_BUFFER_BIT).

Fractals

L-Systems

Lindenmayer systems, eg. the Koch curve.
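An L-system is essentially repeated string rewriting. The sketch below uses one common rule set for the Koch curve, which is an assumption on my part rather than something from the lecture notes: `F` means draw forward, `+` means turn left 60 degrees and `-` means turn right 60 degrees. The names `expand` and `KOCH_RULES` are invented for the example.

```python
def expand(axiom, rules, iterations):
    """Rewrite every symbol of the string in parallel, `iterations` times."""
    s = axiom
    for _ in range(iterations):
        # Symbols without a rule (here + and -) are copied unchanged.
        s = "".join(rules.get(ch, ch) for ch in s)
    return s


# Each straight segment is replaced by four segments with a bump in the middle.
KOCH_RULES = {"F": "F+F--F+F"}

print(expand("F", KOCH_RULES, 1))
print(expand("F", KOCH_RULES, 2).count("F"))  # number of line segments
```

Feeding the resulting string to a turtle-style drawing routine would trace out the curve; each iteration multiplies the number of segments by four.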

Self-similarity

- Exact (eg. Sierpinski triangle)

- Stochastic (eg. Mandelbrot set)

IFS – Iterated Function System

================================================

Shading Models

There are two main types of rendering that we cover,

- polygon rendering

- ray tracing

Polygon rendering is used to apply illumination models to polygons, whereas ray tracing applies to arbitrary geometrical objects. Ray tracing is more accurate, whereas polygon rendering does a lot of fudging to get things to look real, but polygon rendering is much faster than ray tracing.

- With polygon rendering we must approximate NURBS into polygons, with ray tracing we don’t need to, hence we can get perfectly smooth surfaces.

- Much of the light that illuminates a scene is indirect light (meaning it has not come directly from the light source). In polygon rendering we fudge this using ambient light. Global illumination models (such as ray tracing, radiosity) deal with this indirect light.

- When rendering we assume that objects have material properties which we denote k(property).

- We are trying to determine I which is the colour to draw on the screen.

We start with a simple model and build up,

Let’s assume each object has a defined colour. Hence our illumination model is $I = k_i$ (where $k_i$ is the object’s own colour), which is very simple but looks unrealistic.

Now we add ambient light into the scene. Ambient light is indirect light (ie. it did not come straight from the light source) that has instead reflected off other objects (from diffuse reflection). The model becomes $I = I_a k_a$, where $I_a$ is the intensity of the ambient light and $k_a$ is the object’s ambient reflection coefficient. We will just assume that all parts of our object have the same amount of ambient light illuminating them for this model.

Next we use the diffuse illumination model to add shading based on light sources. This works well for non-reflective surfaces (matte, not shiny) as we assume that light reflected off the object is equally reflected in every direction.

Lambert’s Law

“intensity of light reflected from a surface is proportional to the cosine of the angle between L (vector to light source) and N(normal at the point).”
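As a rough sketch, Lambert's law gives a diffuse term of light intensity times a diffuse coefficient times cos(angle), where the cosine is the dot product of the unit vectors N and L. The function and parameter names below (`diffuse`, `k_d`, `to_light`) are my own labels, and clamping negative values to zero (surface facing away from the light) is a common convention that I am assuming here.

```python
import math


def normalise(v):
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def diffuse(light_intensity, k_d, normal, to_light):
    """Diffuse reflection: intensity * k_d * cos(angle between N and L)."""
    n, l = normalise(normal), normalise(to_light)
    # A negative cosine means the light is behind the surface: no diffuse light.
    return light_intensity * k_d * max(0.0, dot(n, l))


print(diffuse(1.0, 0.5, (0, 0, 1), (0, 0, 2)))             # light directly overhead
print(diffuse(1.0, 0.5, (0, 0, 1), (1, 0, 0)))             # light grazing the surface
print(diffuse(1.0, 1.0, (0, 0, 1), (math.sqrt(3), 0, 1)))  # light at 60 degrees
```

The viewer's position does not appear anywhere, which matches the assumption that a matte surface reflects light equally in every direction.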

Gouraud Shading

Use normals at each vertex to calculate the colour of that vertex (if we don’t have them, we can calculate them from the polygon normals of the surrounding faces). Do this for each vertex in the polygon and interpolate the colour to fill the polygon. The vertex normals address the common issue that our polygon surface is just an approximation of a curved surface.

To use Gouraud shading in OpenGL use glShadeModel(GL_SMOOTH). But we also need to define the vertex normals with glNormal3f() (the normal you set applies to every glVertex that you specify after calling glNormal).

Highlights don’t look realistic as you are only sampling at every vertex.

Interpolated shading is the same, but we use the polygon normal as the normal for each vertex, rather than the vertex normal.

Phong Shading

Like Gouraud, but you interpolate the normals and then apply the illumination equation for each pixel.

This gives much nicer highlights without needing to increase the number of polygons, as you are sampling at every pixel.

Phong Illumination Model

Diffuse reflection and specular reflection.

- Components of the Phong Model (Brad Smith, http://commons.wikimedia.org/wiki/File:Phong_components_version_4.png)

(Source: COMP3421, Lecture Slides.)

n is the Phong exponent and determines how shiny the material is (the larger n, the smaller the highlight circle).
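The effect of the exponent can be seen with a small sketch of the specular term in its usual textbook form, light intensity times k_s times (R · V)^n, where R is L reflected about N. The names `reflect`, `specular`, `k_s` and `shininess` are labels chosen for this example, and all vectors are assumed to already be unit length.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def reflect(l, n):
    """Reflect the unit vector l (pointing towards the light) about the unit normal n."""
    d = dot(l, n)
    return tuple(2 * d * nc - lc for lc, nc in zip(l, n))


def specular(light_intensity, k_s, n, l, v, shininess):
    """Phong specular term; v is the unit vector towards the viewer."""
    r = reflect(l, n)
    r_dot_v = max(0.0, dot(r, v))   # no highlight when looking away from R
    return light_intensity * k_s * r_dot_v ** shininess


normal = (0.0, 0.0, 1.0)
to_light = (0.0, 0.0, 1.0)
slightly_off = (0.6, 0.0, 0.8)   # viewer a little away from the mirror direction

for exponent in (1, 10, 100):
    print(exponent, specular(1.0, 0.5, normal, to_light, slightly_off, exponent))
```

As the exponent grows, the contribution for the slightly off-axis viewer falls towards zero, so the highlight shrinks to a tight spot around the mirror direction.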

Flat shading. Can do smooth shading with some interpolation.

If you don’t have vertex normals, you can interpolate it using the face normals of the surrounding faces.

Gouraud interpolates the colour, phong interpolates the normals.

Attenuation

Inverse-square attenuation is physically correct, but it looks wrong because real lights are not the single points we usually use to describe a scene.

For now I assume that all polygons are triangles. We can store the normal per polygon. This will render the polygon, but most of the time the polygon model is just an approximation of some smooth surface, so what we really want to do is use vertex normals and interpolate them across the polygon.

Ray Tracing

For each pixel on the screen, shoot out a ray and bounce it around the scene. This is equivalent to tracing rays from the light sources, but very few of those rays would make it into the camera, so tracing from the lights is not very efficient.

Each object in the scene must provide an intersection(Line2D) function and a normal (Point3D) function

Ray Tree

Nodes are intersections of a light ray with an object. Can branch intersections for reflected/refracted rays. The primary ray is the original ray and the others are secondary rays.

Shadows

Can do them using ray tracing, or can use shadow maps along with the Z buffer. The key to shadow maps is to render the scene from the light’s perspective and save the depths in the Z buffer. Then can compare this Z value to the transformed Z value of a candidate pixel.

==============

Rasterisation

Line Drawing

DDA

- You iterate over x or y, and calculate the other coordinate using the line equation (and rounding it).

- If the gradient of the line is > 1 we must iterate over y otherwise iterate over x. Otherwise we would have gaps in the line.

- Also need to check if x1 is > or < x2 or equal and have different cases for these.
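One way to fold those cases together is to always step along whichever axis covers more pixels, and let the sign of the increment handle the direction. The sketch below does that; `dda_line` is a name chosen for illustration, and rounding is done with floor(v + 0.5) because Python's built-in `round` rounds halves to the nearest even number.

```python
import math


def dda_line(x1, y1, x2, y2):
    """Return the pixels of a line between two integer end points using DDA."""
    dx, dy = x2 - x1, y2 - y1
    steps = max(abs(dx), abs(dy))      # iterate over the longer axis: no gaps
    if steps == 0:
        return [(x1, y1)]
    x_inc, y_inc = dx / steps, dy / steps   # one of these is exactly +1 or -1
    x, y = float(x1), float(y1)
    pixels = []
    for _ in range(steps + 1):
        pixels.append((math.floor(x + 0.5), math.floor(y + 0.5)))
        x += x_inc
        y += y_inc
    return pixels


print(dda_line(0, 0, 4, 2))   # shallow line: steps along x
print(dda_line(0, 0, 1, 3))   # steep line: steps along y
print(dda_line(4, 2, 0, 0))   # right to left
```

Note the floating point additions and the rounding in the inner loop; that cost is what Bresenham's algorithm avoids.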

Bresenham

- Only uses integer calcs and no multiplications so it’s much faster than DDA.

- We define an algorithm for the 1st octant and deal with the other octants with cases.

- We start with the first pixel being the lower left end point. From there there are only two possible pixels that we would need to fill. The one to the right or the one to the top right. Bresenham’s algorithm gives a rule for which pixel to go to. We only need to do this incrementally so we can just keep working out which pixel to go to next.

- The idea is we accumulate an error and when that exceeds a certain amount we go up right, then clear the error, otherwise we add to the error and go right.

Drawing a line with Bresenham’s algorithm is just doing linear interpolation, so we can use Bresenham’s algorithm for other tasks that need linear interpolation.
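Here is a sketch of the first-octant case in the commonly used integer "decision variable" form, which is one way of tracking the accumulated error (scaled by 2·dx so that everything stays an integer). The function name `bresenham_first_octant` is made up, and the other octants are deliberately left out.

```python
def bresenham_first_octant(x0, y0, x1, y1):
    """Pixels of a line in the first octant.

    Assumes integer end points with x0 <= x1 and 0 <= (y1 - y0) <= (x1 - x0).
    """
    dx, dy = x1 - x0, y1 - y0
    error = 2 * dy - dx        # scaled error term; its sign picks the next pixel
    y = y0
    pixels = []
    for x in range(x0, x1 + 1):
        pixels.append((x, y))
        if error > 0:          # the line has drifted past the pixel midpoint:
            y += 1             # go up-right and take the error back down
            error -= 2 * dx
        error += 2 * dy        # moving one pixel right always adds the slope
    return pixels


print(bresenham_first_octant(0, 0, 4, 2))
print(bresenham_first_octant(0, 0, 5, 2))
```

Only integer additions, subtractions and comparisons happen inside the loop (the doublings can be precomputed or done with shifts).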

Polygon Filling

Scan line Algorithm

The Active Edge List (AEL) is initially empty and the Inactive Edge List (IEL) initially contains all the edges. As the scanline crosses an edge it is moved from the IEL to the AEL, then after the scanline no longer crosses that edge it is removed from the AEL.

To fill the scanline,

- On the left edge, round up to the nearest integer, with round(n) = n if n is an integer.

- On the right edge, round down to the nearest integer, but with round(n) = n-1 if n is an integer.
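The two rounding rules can be written with a single ceiling function each. In this sketch `span_pixels` is a hypothetical helper that takes the exact x coordinates where the scanline crosses the left and right edges and returns the pixel columns to fill.

```python
import math


def span_pixels(x_left, x_right):
    """Pixel columns to fill on one scanline between two edge crossings."""
    first = math.ceil(x_left)        # round up; an integer n stays n
    last = math.ceil(x_right) - 1    # round down; an integer n becomes n - 1
    return list(range(first, last + 1))


print(span_pixels(1.2, 4.7))
print(span_pixels(2, 5))   # polygon A ends exactly at x = 5 ...
print(span_pixels(5, 8))   # ... and polygon B starts there
```

The asymmetry means that when two polygons share an edge, the pixel column on that edge is filled by exactly one of them rather than by both or neither.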

It’s really easy to fill a triangle, so an alternative is to split the polygon into triangles and just fill the triangles.

===============

Anti-Aliasing

Ideally a pixel’s colour should be a weighted blend: for each polygon visible in that pixel, take the fraction of the pixel’s area where the polygon is visible (ie. not covered by other polygons on top of it) times the polygon’s average colour over that region, and add up these contributions.

Aliasing Problems

- Small objects that fall between the centres of two adjacent pixels are missed when there is no anti-aliasing. Anti-aliasing would fix this by shading the pixels grey, rather than the full black they would get if the polygon filled the whole pixel.

- Edges look rough (“the jaggies”).

- Textures disintegrate in the distance

- Other non-graphics problems.

Anti-Aliasing

In order to really understand this anti-aliasing stuff I think you need some basic understanding of how a standard scene is drawn. When using a polygon rendering method (such as is done with most real time 3D), you have a framebuffer which is just an area of memory that stores the RGB values of each pixel. Initially this framebuffer is filled with the background colour, then polygons are drawn on top. If your rendering engine uses some kind of hidden surface removal it will ensure that the things that should be on top are actually drawn on top.

Using the example shown (idea from http://cgi.cse.unsw.edu.au/~cs3421/wordpress/2009/09/24/week-10-tutorial/#more-60), and using the rule that if a sample falls exactly on the edge of two polygons, the pixel is only filled if it is a top edge of the polygon.

No Anti-Aliasing

With no anti-aliasing we just draw the pixel as the colour of the polygon that takes up the most area in the pixel.

Pre-Filtering

- We only know what colours came before this pixel, and we don’t know if anything will be drawn on top.

- We take a weighted average (weighted by the fraction of the pixel that each polygon covers) along the way. For example, if the pixel was filled half with green and then the other half with red, the final anti-aliased colour of that pixel would be determined by,

Green (0, 1, 0) averaged with red (1, 0, 0), which is (0.5, 0.5, 0). If we had any more colours we would then average (0.5, 0.5, 0) with the next one, and so on.

- Remember weighted averages: the weighted average of $c_1$ and $c_2$ with weights $w_1$ and $w_2$ respectively is $\frac{w_1 c_1 + w_2 c_2}{w_1 + w_2}$.

- Pre-filtering is designed to work with polygon rendering because you need to know the coverage ratio, which by nature a ray tracer doesn’t know (because it just takes samples), nor does it know which polygons fall in a given pixel (again because ray tracers just take samples).

- Pre-filtering works very well for anti-aliasing lines, and other vector graphics.

Post-Filtering

- Post-filtering uses supersampling.

- We take some samples per pixel (we can jitter them (stochastic sampling), but this only really helps when you have vertical or horizontal lines moving vertically or horizontally across a pixel, eg. with vector graphics). Some fraction of the samples are green and some are red, so we average the samples to get the final pixel colour.

- We can weight these samples (usually centre sample has more weight). The method we use for deciding the weights is called the filter. (equal weights is called the box filter)

- Because we have to store all the colour values for the pixel we use more memory than with pre-filtering (but don’t need to calculate the area ratio).

- Works for either polygon rendering or ray tracing.
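The averaging step of post-filtering can be sketched as below. The sample colours and weights are made-up values purely for illustration, and `box_filter` and `weighted_filter` are names invented for this example.

```python
GREEN = (0, 1, 0)
RED = (1, 0, 0)


def box_filter(samples):
    """Equal weights: the plain average of the sample colours."""
    n = len(samples)
    return tuple(sum(s[i] for s in samples) / n for i in range(3))


def weighted_filter(samples, weights):
    """General filter: a weighted average of the sample colours."""
    total = sum(weights)
    return tuple(sum(w * s[i] for s, w in zip(samples, weights)) / total
                 for i in range(3))


# Hypothetical pixel with four samples: three hit a green polygon, one a red one.
print(box_filter([GREEN, GREEN, GREEN, RED]))

# Same pixel, but the first (say, centre) sample counts twice as much.
print(weighted_filter([GREEN, GREEN, GREEN, RED], [2, 1, 1, 1]))
```

All the samples have to be kept until the pixel is finished, which is where the extra memory cost mentioned above comes from.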

Can use adaptive supersampling. If it looks like a region is just one colour, don’t bother supersampling that region.

OpenGL

Often the graphics card will take over and do supersampling for you (full scene anti-aliasing).

To get OpenGL to anti-alias lines you need to first tell it to calculate alpha for each pixel (ie. the fraction of the pixel’s area that the line covers) using glEnable(GL_LINE_SMOOTH), and then enable alpha blending to apply this when drawing using,

glEnable(GL_BLEND); glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

You can do post-filtering using the accumulation buffer (which is like the framebuffer but will apply averages of the pixels), and jittering the camera for a few times using accPerspective.

Anti-Aliasing Textures

A texel is a texture pixel whereas a pixel in this context refers to a pixel in the final rendered image.

When magnifying the image we can use bilinear filtering (linear interpolation in both directions) to fill the gaps.
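A small sketch of bilinear filtering on a greyscale texture: interpolate along x on the two nearest rows, then interpolate those two results along y. The `bilinear` function, the texel-coordinate convention (texel centres at integer coordinates) and the tiny 2x2 texture are all assumptions made for the example.

```python
def bilinear(texture, u, v):
    """Sample texture[row][col] at fractional texel coordinates (u, v).

    Assumes 0 <= u <= width - 1 and 0 <= v <= height - 1.
    """
    x0, y0 = int(u), int(v)
    x1 = min(x0 + 1, len(texture[0]) - 1)   # clamp at the right edge
    y1 = min(y0 + 1, len(texture) - 1)      # clamp at the bottom edge
    fx, fy = u - x0, v - y0                 # how far we are between texels
    top = (1 - fx) * texture[y0][x0] + fx * texture[y0][x1]
    bottom = (1 - fx) * texture[y1][x0] + fx * texture[y1][x1]
    return (1 - fy) * top + fy * bottom


texture = [[0, 10],
           [20, 30]]

print(bilinear(texture, 0.5, 0.5))   # centre of the four texels
print(bilinear(texture, 1.0, 0.0))   # exactly on a texel
```

Sampling exactly on a texel returns that texel's value, and points in between get a smooth blend instead of the blocky look of nearest-neighbour sampling.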

Mip Mapping

Store scaled down copies of the texture, choose the closest one, and also interpolate between levels where needed. Interpolating between levels is called trilinear filtering.

Rip Mapping helps with non uniform scaling of textures. Anisotropic filtering is more general and deals with any non-linear transformation applied to the texture.

Double Buffering

We can animate graphics by simply changing the framebuffer. However, if we cannot change the framebuffer faster than the rate at which the screen displays its contents, the screen gets drawn when we have only changed part of the framebuffer. To prevent this, we render the image to an off-screen buffer and when we finish we tell the hardware to switch buffers.

Can do on-demand rendering (only refill the framebuffer when we need to) or continuous rendering (the draw method is called at a fixed rate and the image is redrawn regardless of whether the image needs to be updated).

LOD

Mip Mapping for models. Can have some low poly models that we use when far away, and use the high res ones when close up.

Animation

Define key-frames and tween (interpolate) between them to fill in the frames in between.
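As a sketch, the simplest form of tweening is linear interpolation between the two key-frames either side of the current frame. The `tween` function and the key-frame values below are hypothetical; real animation systems often use smoother (eg. spline) interpolation instead.

```python
def tween(keyframes, frame):
    """Linearly interpolate a value at `frame`.

    keyframes is a list of (frame_number, value) pairs sorted by frame_number.
    Frames before the first or after the last key-frame hold the end values.
    """
    if frame <= keyframes[0][0]:
        return keyframes[0][1]
    for (f0, v0), (f1, v1) in zip(keyframes, keyframes[1:]):
        if f0 <= frame <= f1:
            t = (frame - f0) / (f1 - f0)   # 0 at the first key, 1 at the second
            return v0 + t * (v1 - v0)
    return keyframes[-1][1]


# Hypothetical x position of an object, keyed at frames 0, 10 and 20.
keys = [(0, 0.0), (10, 100.0), (20, 50.0)]
print([tween(keys, f) for f in (0, 5, 10, 15, 20, 25)])
```

The same function works for any animated quantity that can be interpolated, such as a rotation angle or a colour channel.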

===============

Shaders

OpenGL 2.0 using GLSL will let us implement our own programs for parts of the graphics pipeline, particularly the vertex transformation stage and the fragment texturing and colouring stage.

Fragments are like pixels except they may not appear on the screen if they are discarded by the Z-buffer.

Vertex Shaders

- position transformation and projection (set gl_Position), and

- lighting calculation (set gl_FrontColor)

Fragment Shaders

- interpolate vertex colours for each fragment

- apply textures

- etc.

set gl_FragColor.

Human Computer Interaction Notes

These notes are based around my COMP3511 course.

Interaction Design (+Scenarios)

- Interaction Design is about creating user experiences that enhance and augment the way people work, communicate, and interact.

- Interaction Design has a much wider scope than Human Computer Interaction. ID is concerned with the theory and practice of designing user experiences for any technology or system, whereas HCI has traditionally been focused on/surrounding humans and computers specifically.

- Interaction Design involves understanding the requirements.

- Requirements can be functional (what should it do) or non-functional (what are the constraints). The usability principles (heuristics) fit into the non-functional requirements.

- User Profiles are a set of personas. A persona is a short description of a fictional user.

- Scenarios

- Activity Scenario (narrative based on user’s requirements)

- Used at the beginning of the design process.

- Who, When, What, Why

- Not technical/no specific details (re: does not presuppose the interface)

- From the user’s perspective

- Use Case Scenario (narrative of how the user uses the interface to fulfil their goal)

- Include the user’s goals but focus on the user/computer interaction (re: talk about the technology)

- Basically is a description of the use case diagram.

- Do these scenarios after you figure out the requirements

- Different users would have different use cases. We can show this with a use case diagram, which shows the actors and the various use cases that they encounter.

- Task Scenario

- Used when running a usability test. ie. give the participant a scenario before giving them a task to give them context.

- When describing a scenario, give the user’s goals, their context and situation. Use a narrative form.


Cooper et al. describe the process of interaction design as,

- Identifying needs and establishing requirements for the user experience.

- Developing alternative designs that meet those requirements.

- Building interactive versions of the designs so that they can be communicated and assessed.

- Evaluating what is being built throughout the process and the user experience it offers.

Scenarios are narratives about named people with an age. We need some background to understand where they are coming from (for instance their cultural background (eg. the US uses MM/DD/YYYY but Australia uses DD/MM/YYYY)). We try to remove incorrect assumptions about what we think a certain group of people are like. The scenario should explain their motivations and their goals.

Usability

Usability is all about producing things which are usable. Something is usable when it meets these usability goals; however, you should work out which goals are most important for the problem and focus on those first.

Usability Goals

- easy to learn

- easy to remember how to use

- effective to use

- efficient to use

- safe to use

- have good utility (providing the right kind of functionality to allow the user to achieve their goal)

User Experience Goals

- satisfying

- fun

- helpful

- motivating

- universal access (accessibility)

Heuristics (Usability Principles)

- visibility of system status (eg. busy mouse icon)

- match between system and real world (includes interface metaphors. eg. files and folders concept, “speak the user’s language” (avoid jargon that users may not understand))

- user control and freedom (includes letting the user cancel/exit. eg. can pause/cancel file transfers)

- consistency and standards (eg. consistent terminology, consistent workflows, common look and feel, GUI toolkits. eg. GNOME/GTK+)

- help and documentation

- help users recognise, diagnose and recover from errors (tell users what the problem was, why it happened and some possible solutions, using plain English. eg. recover from trash)

- error prevention (eg. move to trash first)

- recognition rather than recall (GUI applications menu as opposed to CLI)

- flexibility and efficiency of use (eg. keyboard shortcuts (helpful for experts, but hidden from novices))

- aesthetic and minimalist (uncluttered) design (maybe put rarely used info into a help page/manual rather than in the interface)

Design Principles

- visibility

- feedback (can be audio, visual…)

- affordances (clues on how to use. eg. ⋮∶ affords grabbing)

- consistency (includes look and feel consistency)

- mapping

- eg. which light switch controls which light

- constraints

- logical (eg. grey out menu options that are not allowed)

- physical (eg. you can’t plug a USB cable into a VGA port, c.f. you can put a DVD in a CD player)

- cultural

When designing a system we need to consider,

- who are the users,

- how will the product be used,

- where will the product be used

Identifying Needs

Requirements

When testing an interface with users/test participants, give them a high level goal and observe how they go about doing it. Don’t give them specific instructions.

Use Scenario 1: For each task identified (or major tasks, or particularly special tasks if many tasks are defined), write a description of how that user would accomplish the task independent of how they would complete it within the application.

Use Case 1: If a use scenario has been implemented, include a matching use case which describes how the task use scenario can be completed in the application. There may be branching or multiple ways to complete the task, and this is a good way to document it.

To test if something is a requirement just ask, “If I remove this, will the product still fulfil its purpose?”

Design Conceptualisation

A conceptual model is a high-level description of how a system is organised and operates. –Johnson and Henderson, 2002, p. 26

I like to think of it like this. The person coding the web browser understands that when the user types in a URL and presses enter, an HTTP GET request is sent, the response is received, and the HTML is processed and displayed. There are many technical interactions and details that are happening here. But the conceptual model of this is what the average non-technical user thinks is happening. They just have some kind of model in their head that they type the URL, hit enter and get the web site displayed. It’s just an abstraction of what is going on.

Interface metaphors are used as they can help the user understand and determine how an interface works. We try to use them for this purpose, but just using the metaphor directly can have some negative effects (eg. if you’re designing a radio application for desktop PCs, it may not be a good idea to just show an image of a real radio as the UI). We don’t want to use the metaphor to an extent that it breaks the design principles.

A classic example of a conceptual framework is that of the relation between the design of a conceptual model and the user’s understanding of it. In this framework there are three components, (Sharp et al., 2006)

- The designer’s model – the model the designer has of how the system works (or how it should work)

- The system image – how the system’s actual workings are portrayed to the users through the interface, manuals, etc. (or how it is presented)

- The user’s model – how the user understands the system works (or how it is understood)

The designer’s job is to create the system image so that the users will invoke the same conceptual model as the designer’s.

- “A good conceptual model allows us to predict the effects of our actions.” (Norman, 1988)

The interface could be made more transparent so the user can see the details of how the system works, but this is not always desirable as it may cause confusion. Also many users may not want to know all the gory details, nor should they have to know the actual implementation in order to use the system.

- You can conceptualise how a user interacts with a system in terms of their goals and what they need to do to achieve those goals.

- You can try to give the user a more correct mental model of the system by giving useful feedback based on their input and providing help and documentation.

Prototyping

- Can do low fidelity or high fidelity prototypes.

- Could use paper mockups of screens, storyboards, electronic mockup, electronic prototype…

- Make sure you iterate.

- “the best way to get a good idea is to get lots of ideas” –Linus Pauling

Using A Design Diary

- Brainstorm ideas

- Sketch interface mockups

- Sketch storyboards/work flow diagrams

Wireframes

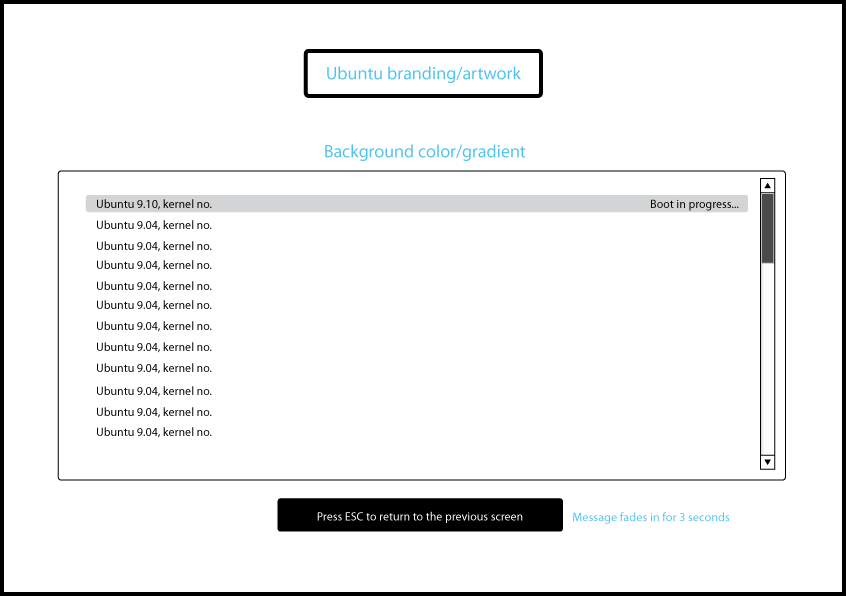

Here is an example wireframe.

Example Wireframe from https://wiki.ubuntu.com/DesktopExperienceTeam/KarmicBootExperienceDesignSpec

Another paper prototype with a slightly higher fidelity.

An example paper prototype (from https://wiki.ubuntu.com/SoftwareStore).

Issues Table

- In this course we list the issues vertically and the participants horizontally.

- Prioritise and group the issues. (Maybe use affinity diagramming for the grouping)
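As a rough sketch of the issues table described above (issues down the side, participants across the top), the snippet below builds one from a list of observations. The function names and the sample data are made up for illustration, and ordering by how many participants hit an issue is just one possible way to prioritise; severity usually matters too.

```python
def build_issues_table(observations):
    """observations: list of (participant, issue) tuples noted during testing.

    Returns (participants, rows), where rows maps each issue to a list of
    booleans, one per participant, in the same order as participants.
    """
    participants = sorted({p for p, _ in observations})
    issues = []
    for _, issue in observations:
        if issue not in issues:  # keep first-seen order
            issues.append(issue)
    seen = set(observations)
    rows = {issue: [(p, issue) in seen for p in participants] for issue in issues}
    return participants, rows


def prioritise(rows):
    """Order issues by the number of participants affected, most common first."""
    return sorted(rows, key=lambda issue: sum(rows[issue]), reverse=True)


def render(participants, rows):
    """Plain-text table: one row per issue, one column per participant."""
    lines = ["Issue | " + " | ".join(participants)]
    for issue in prioritise(rows):
        marks = ["x" if hit else " " for hit in rows[issue]]
        lines.append(issue + " | " + " | ".join(marks))
    return "\n".join(lines)


# Hypothetical observations from three participants.
observations = [
    ("P1", "Save button hidden"),
    ("P2", "Unclear error text"),
    ("P2", "Save button hidden"),
    ("P3", "Save button hidden"),
]
participants, rows = build_issues_table(observations)
print(render(participants, rows))
```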

Usability Testing

- Can do interviews, questionnaires, usability tests (best to run a dry run of these before you start testing on many people), interaction logging…

- The purpose of a usability test is to gather feedback from potential users about usability issues as well as ensuring that an interface can be used and works as expected.

- Testing should be done throughout the whole process during prototyping, beta versions, and deployed applications.

- According to Nielsen you only need to test an interface with 5 people to find about 80% of the issues (see Nielsen, Usability Engineering, p173) (however, to publish research 5 is statistically too small, so you should use at least 10).

- When planning a test you need to define scenarios and tasks as well as deciding what to test and how to collect the results. It’s a good idea to have goals that try to measure the success of the usability principles.

- Test the parts of your interface which would be used most, as well as any particularly difficult to design aspects first.
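The "5 people" figure above is usually justified with the Nielsen and Landauer model, where the proportion of problems found by n users is 1 − (1 − L)^n and L is the proportion a single user reveals. The sketch below assumes L = 0.31, the average they reported; the real value varies from project to project, so treat the numbers as indicative only.

```python
def proportion_found(n_users, single_user_rate=0.31):
    """Estimated proportion of usability problems found by n_users.

    Uses the model 1 - (1 - L)^n. The default L = 0.31 is an assumed
    average, not a property of any particular interface.
    """
    if n_users < 0:
        raise ValueError("n_users must be non-negative")
    return 1 - (1 - single_user_rate) ** n_users


for n in (1, 3, 5, 10):
    print(n, round(proportion_found(n), 2))
```

With these assumptions 5 users find roughly 84% of the problems, which is where the "about 80%" rule of thumb comes from; each extra user after that finds less that is new.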

There are some legal and ethical issues to consider. The participant,

- needs to sign a consent form for you to run a test with them.

- is free to stop participating at any time.

- must be made aware of how you are observing them and what will be done with the data collected. eg. Is the session being recorded on video or audio, observed by people, screen captured…? Will the data be anonymous? Will the anonymous results be published?

- should be made aware that you are testing the software, not them.

During a Usability Test,

- Avoid leading questions. (eg. try to avoid “How much do you like this interface?”)

- When running a usability test be careful not to bias your results. For example, instead of asking the user “How would you do X?” when there is a button labelled “Do X”, give them a scenario which has a goal and ask them how they would go about achieving it with the interface.

- You want the participant to “think aloud” so that you know what they are thinking when they are using the interface you are testing.

- If users are struggling, give them a hint. If that doesn’t help, explain the expected solution and move on, but note that they needed assistance when recording the test data.

- If a task is difficult for the user, it’s not the user’s fault, it’s the interface’s!

- We want to record things like the time to complete the task, and the number and nature of errors encountered and by whom… Things that address the usability principles. You should record both these quantitative measurements and any qualitative things that you observe or the participant mentions.

After the Testing,

- Collate feedback and test data (Use an issues table to record the usability issues that the participants had.)

- Group issues and prioritise them.

- When analysing results consider,

- If a user is asked to compare two interfaces, the results may bias towards the second as they learn from their first experience.

- Can try to solve this by getting some participants to do A then B, and others B then A.

- Observing how a user interacts with an interface may change how they interact with it. (ie. they may have done things differently if they were at home, without you scrutinising their every move).

- We can try to avoid this by making the participants feel comfortable and reinforcing that we are not testing them, we are testing the interface. Assure them that there is no incorrect way to use the interface, and that they shouldn’t avoid doing things just because they know we are taking notes.
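The "some do A then B, others B then A" idea above is called counterbalancing. A minimal sketch, assuming exactly two interfaces and simply alternating the order down the participant list (the function name and participant labels are hypothetical):

```python
def counterbalance(participants, conditions=("A", "B")):
    """Assign each participant an order in which to try two interfaces.

    Orders alternate down the list, so roughly half the participants see
    the first interface first and half see the second first.
    """
    first, second = conditions
    orders = {}
    for index, participant in enumerate(participants):
        if index % 2 == 0:
            orders[participant] = (first, second)
        else:
            orders[participant] = (second, first)
    return orders


print(counterbalance(["P1", "P2", "P3", "P4"]))
```

In practice you would probably shuffle the participant list first, so that who ends up in which group is not tied to sign-up order.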

Usability Testing

When actually running a usability test you should follow a usability test plan. The test plan just details what the test facilitator should do during the test.

The usability testing procedures we used in this course are:

- Explain procedures (think aloud, data collection methods in use…)

- Make sure they agree and sign a consent form before proceeding (you keep one, they keep one)

- Run a pre-test questionnaire to generate a participant profile. This gives you an idea of their experience level and any background they have with similar interfaces, as these affect how the participant performs. (It is best to get the participant to do this a few days before the test so that you can focus on specific tasks.)

- Introduce scenario

- Run through tasks

- Ask any post test questions

- Ask whether they have any extra comments (debriefing)

- Thank them for their time

Interviews

- Can be open ended (participant gives longer responses which may include their reasoning) or closed (list of options participant chooses from).

- When running an interview give,

- An introduction to the interview (what you are doing, purpose, what happens to the responses, how it is being recorded)

- Warm-up questions

- Main section. (use a logical sequence)

- Cool-off questions

- Closing remarks.

Questionnaires

- Participant Sample (You probably want a sample representative of your users/target users).

User Centred Design Process

The UCD process is all about focusing on the users and tasks. It also means iterating on your designs often. The development is driven by users’ needs rather than technical concerns.

More specifically Gould and Lewis (1985) give three principles,

- Early focus on users and tasks.

- Empirical measurement.

- Iterative design.

Affinity Diagramming

- This is where we collect ideas and then group them.

- Don’t use pre-defined groups, make them up as you start sifting through the ideas.

- The idea is to organise a bunch of individual ideas into a hierarchy.

Card Sorting

- Get a bunch of people to sort cards with some idea/concept/whatever into categories and then compare how they were sorted by the different participants.
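One common way to compare how different participants sorted the cards is to count, for every pair of cards, how many participants put them in the same group. The sketch below does that; the card names and the data layout (one list of groups per participant) are assumptions for illustration.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Count how often each pair of cards was placed in the same group.

    sorts: one entry per participant, each a list of groups, where a
    group is a list of card names. Returns a Counter keyed by an
    alphabetically ordered (card, card) tuple.
    """
    counts = Counter()
    for groups in sorts:
        for group in groups:
            for pair in combinations(sorted(group), 2):
                counts[pair] += 1
    return counts


# Two hypothetical participants sorting four cards.
sorts = [
    [["Open", "Save"], ["Copy", "Paste"]],
    [["Open", "Save", "Copy"], ["Paste"]],
]
counts = co_occurrence(sorts)
for pair, n in counts.most_common():
    print(pair, n)
```

Pairs with a high count are cards most participants think belong together, which is a reasonable starting point for menu or navigation groupings.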

Software Lifecycles

- Waterfall

- W-model

- Spiral

- Rapid Application Development

- Star Model (evaluation at each step)

- ISO 13407 (the HCI model goes around in a circle and only exits when satisfactory)

Cognitive Load Theory

Cognition is what goes on in our brains. It includes cognitive processes such as,

- attention

- Some techniques are particularly good at grabbing attention (flashing, moving, colourful or large things). But these should be used sparingly.

- perception and recognition

- Gestalt principles of perceptual organisation. ie. we group things by proximity, similarity, closure, symmetry and continuity.

Gestalt principles of perceptual grouping. (a. you see two groups the left two and the right two; b. you see the objects in columns rather than rows; c. you group the () braces together; d. you see two curves and most people probably see the same two curves (as opposed to sharp edges that meet at the vertex))

- memory

- learning

- reading, speaking and listening

- problem-solving, planning, reasoning and decision-making.

- automatic processes

- The Stroop effect (trying to say the colour of the text rather than read the words, eg. red green blue orange purple pink) is due to this.

Some Cognitive Load Theory

- Huge long term memory (with the information stored in schemas) and a limited working memory.

- Schemas allow us to bypass our working memory limitations by chunking information.

- They allow us to ignore the huge amount of detail coming from our senses and instead integrate with our existing schemas.

- eg. it’s much easier to memorise SYSTEM than YSMSTE.

- “Automated Schemas”

- Worked Examples instead of Means-Ends Analysis

- It’s better to give new users a quick (or even not so quick) ‘worked example’ of how the interface works/how to use it, than to just let them work it out for themselves.

- Split Attention Effect

- e.g. “See figure 16.”, “Refer to page 26”… “Requires us to mentally integrate information that is physically split.” [2]

- Solution: physically integrate the material.

- Don’t force users to recall information from a previous screen.

- The Redundancy Effect

- It is better to omit redundant information, as it generally just confuses people.

- Expertise Reversal Effect

- It’s better to assume your audience is novice, if you are unsure.

- Novices need lots of worked out examples

- Reduce Search (reduces cognitive load)

- By using a consistent layout

- By reducing visual clutter

- Diagrams can reduce cognitive load

- Modality Effect

- Separate working memories for audio and visual senses.

- Therefore presenting information both visually and aurally allows for a greater total working memory size than presenting it only visually or only aurally. (But we should consider users with disabilities, so relying on this by presenting some information only visually and other information only aurally is not a good idea.)

Some HCI Applications

- The Training Wheels approach involves restricting the features of an interface for novices until they become more experienced when advanced features are enabled.

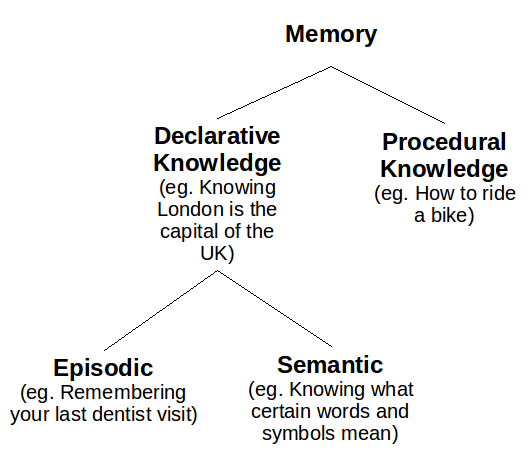
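The Training Wheels approach mentioned above could be sketched as a simple feature gate. Everything here is hypothetical: the feature names, and the idea of using a count of completed sessions as the measure of experience, are assumptions chosen only to show the shape of the idea.

```python
# Hypothetical feature sets for an imaginary word processor.
BASIC_FEATURES = {"open", "save", "print"}
ADVANCED_FEATURES = {"macros", "mail_merge"}

# Assumed threshold: advanced features unlock after this many sessions.
UNLOCK_AFTER_SESSIONS = 5


def available_features(sessions_completed):
    """Return the features to show a user with the given experience."""
    if sessions_completed >= UNLOCK_AFTER_SESSIONS:
        return BASIC_FEATURES | ADVANCED_FEATURES
    return set(BASIC_FEATURES)


print(sorted(available_features(0)))
print(sorted(available_features(5)))
```

A real system would likely let users opt in to the advanced features early rather than relying only on a fixed threshold.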

Memory

(From a psychologist’s perspective).

- Memory depends on context (eg. give people the words night, bed, tired, dark, dream… and ask them to recall the list; they will often recall “sleep” even though it was never mentioned). Giving context beforehand will help them store the information and recall it better.

- Miller’s theory is that only 7 ± 2 chunks of information can be held in short-term memory at any one time. (But this doesn’t mean, say, only put seven items in any menu or something like that. This only applies to short-term memory, when the information comes and goes, not when it can be rescanned.)

Long Term Memory

Explicit and Implicit Memory

“Imagine that you learn a list of items and are then required to recall or recognise them. This memory test would be accompanied by conscious awareness that you were remembering. Imagine that a considerable time later, a test of recall or recognition revealed no memory for the items. However if you were given the original list to relearn there would probably be some savings in learning time (i.e. you would take less time to learn the list the second time, even though you were not aware of your memory of the items). This is the distinction between explicit memory, in which remembering is accompanied by either intentional or involuntary awareness of remembering, and implicit memory, in which remembering is not accompanied by awareness (Graf & Schacter 1985; Schacter 1987).” – (Walker, “Chapter 9: Memory, Reasoning and Problem Solving”. pg. 262 (sorry I don’t have the title))

Not really related, but a good thing to hear a text book say,